A bit of history

Hello everyone!

Today we’re talking about a trendy technology in our field. It’s new, but it has roots in ancient times and has even been called an art. We’re talking about Deception Technology.

The goal of deception is to manipulate our perception by exploiting our psychological vulnerabilities, which can change our beliefs, decisions, and actions [1]. Today we’re talking mostly about deception as a defensive technique, although it can certainly be used in offense as well.

Deception as a defense technique has been used since time immemorial. In the 5th century BC, in The Art of War - (yeah, we know, Sun Tzu is sooo over-quoted, but we can’t not mention it) - Sun Tzu spoke of deception in wartime:

“All warfare is based on deception. Hence, when we are able to attack, we must seem unable; when using our forces, we must appear inactive; when we are near, we must make the enemy believe we are far away; when far away, we must make him believe we are near.”

But Sun Tzu was not the only visionary of deception. Julius Caesar and Hannibal - (not to be confused with Hannibal Lecter, who used deception to liven up his lunch menu) - also used deception strategically in their battles. William the Conqueror, the Mongols, the Crusaders in the Middle Ages, Napoleon Bonaparte at the turn of the 19th century, and commanders in the World Wars are all well known for their use of deception in battle strategy.

We could spend hours talking about military deception, but we are going to focus on one story in particular: the Ghost Army. This story has all the nuances we need to extrapolate it to our own wars.

Let’s set the scene: it’s 1943. The Allies decide that it is time to put an end to the Nazis in Europe. The best option was to reach continental Europe from southern England across the English Channel with a large fleet - yes, the famous Normandy Landings - and gain ground until reaching Berlin. There was only one small problem: Hitler had the area well fortified… The Allies had to find a way to distract him from Normandy, so they had to make the Nazis believe that they were going to land at the Pas-de-Calais, 400 kilometers north. And so Operation Fortitude and the aforementioned Ghost Army were born [2].

The Ghost Army was given the name of First United States Army Group (FUSAG). Instead of troops and munitions, it was made up of artists, special effects experts, script writers, audio experts and career military officers, among others. Thus, they began to build fake camps, tents without soldiers, jerrycans without gasoline, munition chests without bullets… they even used inflatable tanks and trucks, complete with hand-dug tracks behind them, just like real vehicles would leave. They reproduced all the typical sounds of a military encampment and wrote letters to the local newspapers to complain about the soldiers’ behavior. In short, they focused their efforts on creating a completely believable story that led the enemy to believe that they were really there, which was a key to the Allied victory.

Let’s skip ahead and hit the 80s, when we start to use the TCP/IP protocol in ARPANET, which makes it ARPA Internet - or as we know it today just the Internet. Here we find one of the first references to deception as a defense technique. We’re talking about the book The Cuckoo’s Egg by Clifford Stoll [3], a must for any deception nerd. Although the terms had not yet been invented, Stoll explains the use of “honeythings” like honeypots and honeydocs, to discover the attacker and his targets.

Since then, honeypots have become more and more sophisticated. But instead of going through that entire history, which is too long for today’s blog post, let’s go directly to Cyber Deception Platforms, and more specifically to the difference between a honeypot and a Deception Platform.

As we saw a few paragraphs above, to be successful on a “mission” - which we’ll call a campaign from here on out - we have to build a credible story so our adversary doesn’t know whether she is in the real environment or not. And not only do we have to deploy a credible honeynet, we also have to monitor it and be able to extract the information when an attacker gets access. This translates into a lot of time and dedication, something we usually don’t have today.

Cyber Deception Platforms facilitate this work, as they allow us to quickly create sophisticated and credible “honeythings” and integrate them (or not) into our production environment. In addition, we can control our entire deception infrastructure automatically, extract telemetry in real time and investigate incidents.

In short, Cyber Deception Platforms let us show the attacker a credible and attractive deception environment. As security experts, we can orchestrate our entire deception network, investigate incidents, analyze the information collected, integrate it with other security tools and much more!

Protecting Active Directory with Cyber Deception

Let’s look at an example of the use of Cyber Deception. To do this, we are going to use CounterCraft’s CyberDeception Platform [4].

Imagine that we know that sooner or later our security will be breached, if it hasn’t been already. The first thing we must do is analyze what worries us the most. Is it actors who are moving laterally across the network? Or specific attacks like ransomware? Or are we perhaps interested in actors who are in a preliminary reconnaissance phase? Maybe we care about our cloud services or the servers we have in the DMZ. Whatever the case, we must take the time to analyze it, establish a list of priorities and create our Deception Plan.

Let’s say that our greatest concern is actors who aim to attack our Active Directory. We can protect our Active Directory (AD) using Cyber Deception.

Why have we chosen AD for this post? For a very simple reason: AD is everything and is everywhere within an organization - and today there are very few organizations that don’t have it.

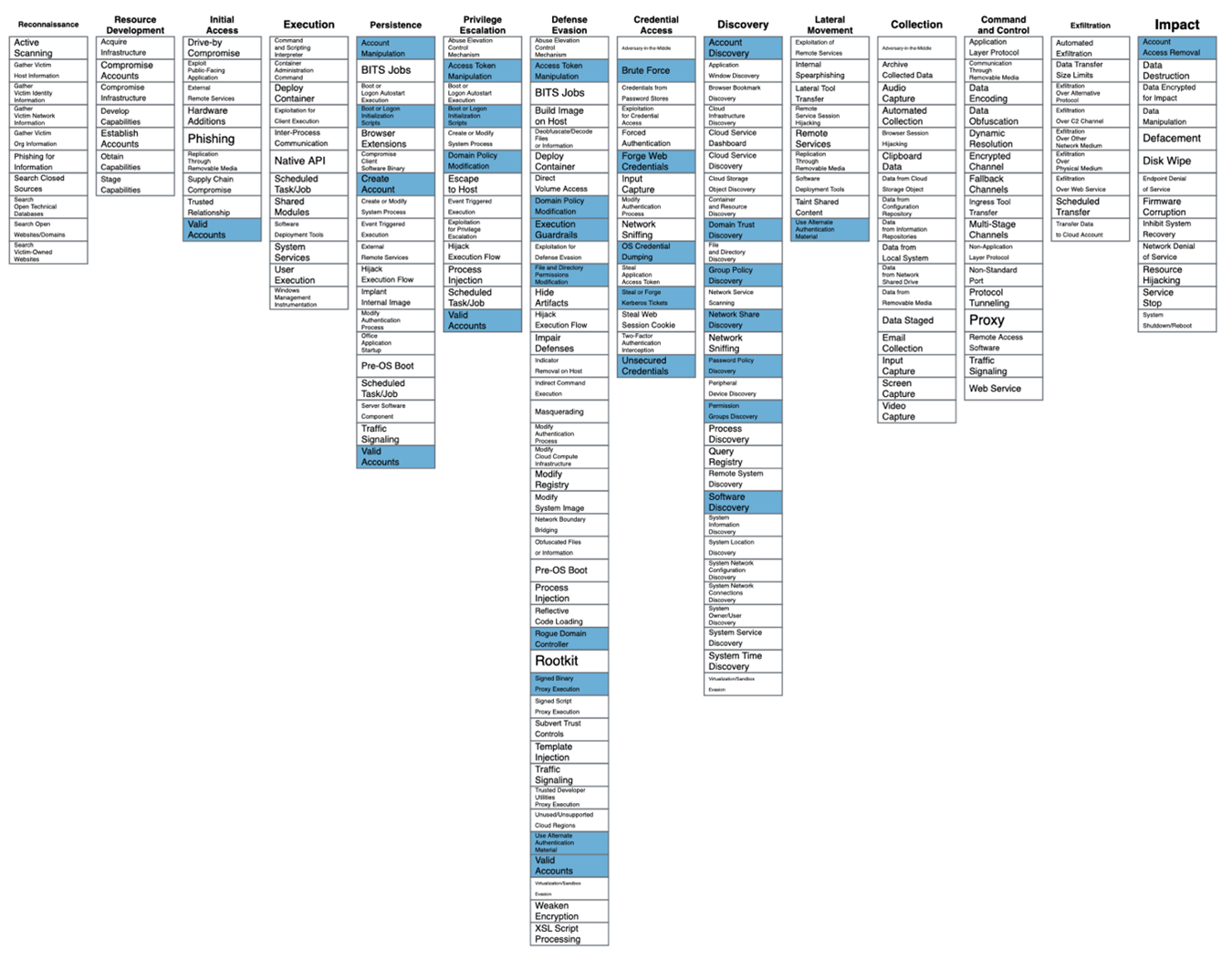

Now, in order to apply appropriate active defense techniques, we first have to know the tactics, techniques and procedures, or TTPs, that we will be facing. We are going to use two well-known frameworks, Mitre ATT&CK [5] and Mitre Engage [6].

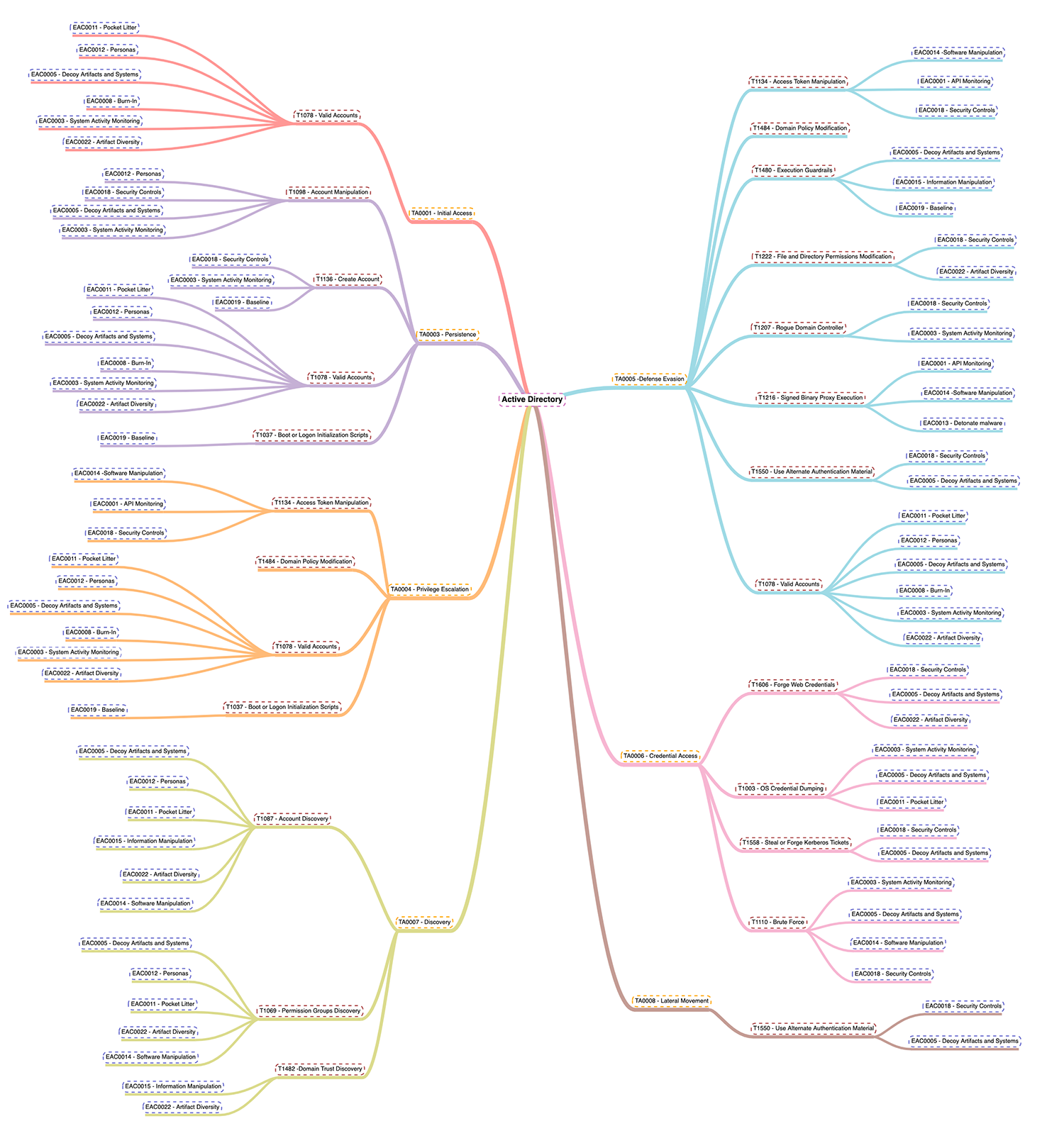

In the following image we have collected the Mitre ATT&CK techniques that are related to AD. Mitre ATT&CK is a globally-accessible knowledge base of adversary tactics and techniques based on real-world observations.

And, in the following image, the techniques of Mitre Engage (Mitre’s active defense matrix) associated with the previous Mitre ATT&CK techniques:

In summary, when an adversary attacks, they expose vulnerabilities of their own that we can take advantage of by applying the indicated active defense techniques:

Adversary Vulnerabilities [6]:

How to take advantage (active defense techniques that can be applied):

Creating a Deception campaign to protect Active Directory

Let’s make it simple: to hunt down adversaries who are trying to attack our AD, we are going to start from the hypothesis that one or more adversaries have compromised - or will compromise - one or more user computers. So we’re going to reduce our scope to attackers who are trying to access confidential information.

With that objective in mind, we have to first analyze our environment, then:

We already know this! It’s deception users and policies

We know this too! They’ll go in our production AD and in breached or susceptible user endpoints

Now we just need to…

Create the story

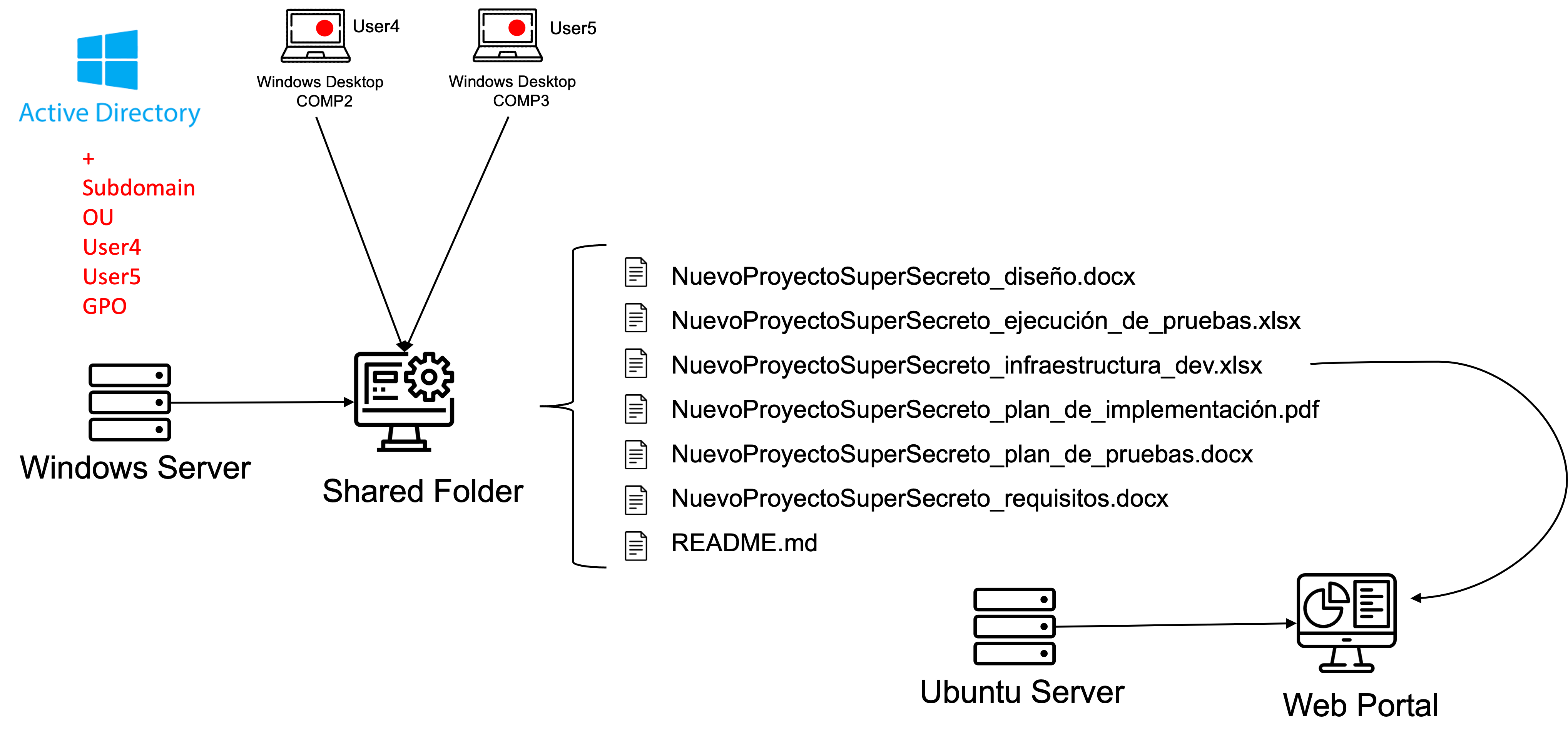

In order to detect adversaries who are trying to obtain user credentials from Active Directory to access confidential information, the story is simple: we will create a new subdomain and organizational unit (OU) with two new users. These fictitious users are working on a confidential project that is attractive to the attacker (for example, developing some new software). These two users will have a GPO that loads a shared folder in their environment…

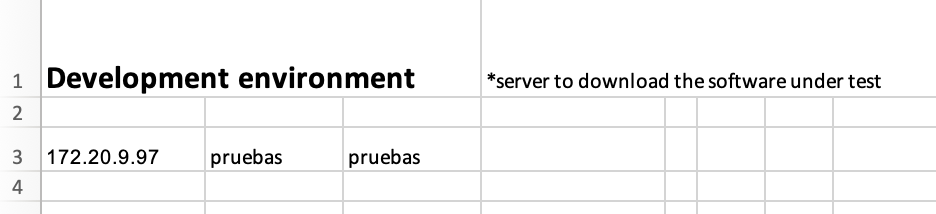

In this folder you will find several documents related to the supposed project, including information on how to access the server to test the software under development.

Therefore, to set up this environment we will need to:

Prepare the environment

Let’s go step by step. First we’ll create the subdomain, the OU, the users, the policies and the shared folder. In our case, we have created the shared folder on a Windows Server whose location is “\\SERVFOLDERS\documents”, the subdomain “secretproject.labo.deception”, the OU “NewSuperSecretProject”, the GPO “shared” to load the shared folder as a drive at startup and the users “User4” and “User5” inside the new OU.

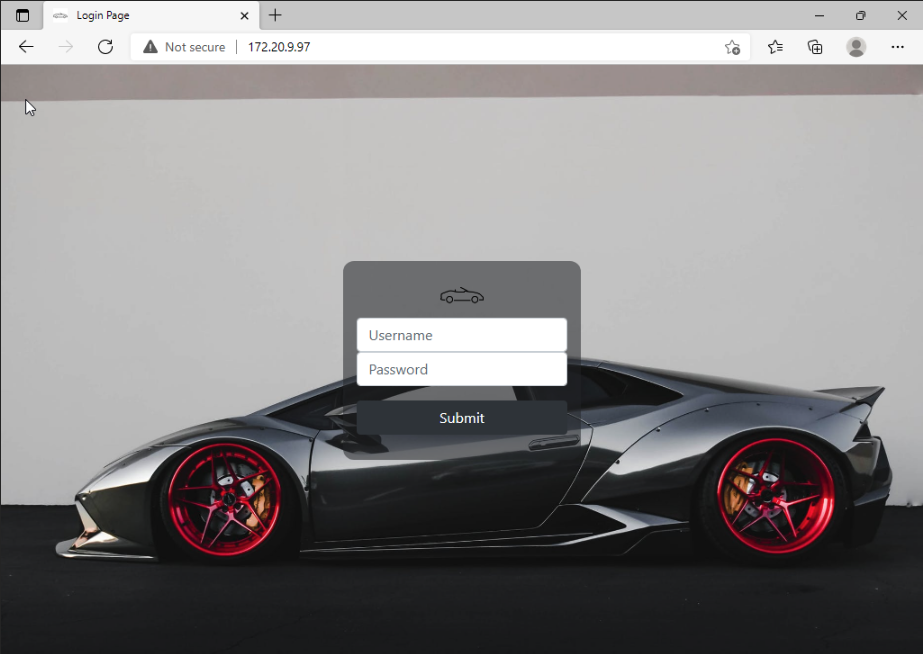

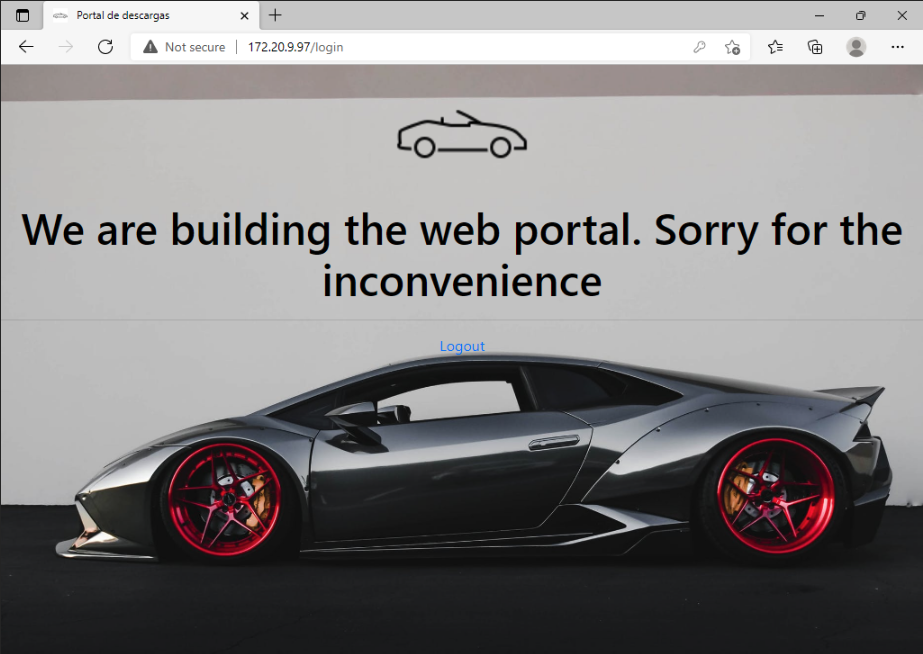

Next, we are going to deploy the server that will contain the web portal in the production network, in a location that is believable and attractive to the adversary. We have added a Linux machine to our lab and deployed a web portal with a login page to download and test the supposed software. The portal is simple: if it is accessed with the correct credentials, a WIP (Work in Progress) message appears.

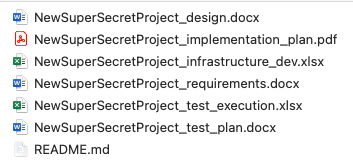
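To give an idea of how little such a decoy portal needs, here is a minimal sketch in Python using only the standard library. It is not the portal we deployed in the lab: the credentials (`tester` / `change-me`), the port and the event fields are placeholders invented for illustration. It serves a login form, shows the WIP message on a valid login and records every attempt as a JSON event.

```python
import hmac
import json
import logging
from datetime import datetime, timezone
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs

# Hypothetical decoy credentials: the ones we would plant in the Excel breadcrumb.
DECOY_USER = "tester"
DECOY_PASSWORD = "change-me"

logging.basicConfig(level=logging.INFO, format="%(message)s")

LOGIN_FORM = b"""<html><body><form method="post" action="/login">
User: <input name="username">
Password: <input name="password" type="password">
<input type="submit" value="Log in"></form></body></html>"""
WIP_PAGE = b"<html><body>WIP - Work in Progress</body></html>"


def check_login(username: str, password: str) -> bool:
    """Compare against the single decoy account in constant time."""
    user_ok = hmac.compare_digest(username.encode(), DECOY_USER.encode())
    pass_ok = hmac.compare_digest(password.encode(), DECOY_PASSWORD.encode())
    return user_ok and pass_ok


def build_event(client_ip, user_agent, username, password, success):
    """Build the record we keep for every login attempt on the decoy."""
    return {
        "time": datetime.now(timezone.utc).isoformat(),
        "source_ip": client_ip,
        "user_agent": user_agent,
        "username": username,
        "password": password,  # decoy system: attempted passwords are telemetry
        "success": success,
    }


class PortalHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        self._send(200, LOGIN_FORM)

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        fields = parse_qs(self.rfile.read(length).decode("utf-8", "replace"))
        username = fields.get("username", [""])[0]
        password = fields.get("password", [""])[0]
        success = check_login(username, password)
        event = build_event(self.client_address[0],
                            self.headers.get("User-Agent", ""),
                            username, password, success)
        logging.info(json.dumps(event))
        self._send(200 if success else 401, WIP_PAGE if success else LOGIN_FORM)

    def _send(self, status, body):
        self.send_response(status)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default access log; we emit our own events


def serve(host="0.0.0.0", port=8080):
    """Run the decoy portal until interrupted."""
    HTTPServer((host, port), PortalHandler).serve_forever()
```

Calling `serve()` starts the portal on port 8080. In a real campaign the events would go to the deception platform or a SIEM rather than to a local log.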

Now it’s time to create the documents that we will find in the shared folder. Since it is a software development project, in this folder we will leave several documents related to the software design, test plans, user guides and a Microsoft Excel document with information about the infrastructure where we can find the web portal for downloading the software.

Create the deception campaign

Alright! Now that we’ve got everything ready, it’s time to create our deception environment. Let’s create and orchestrate our deception assets and create the monitoring rules.

We go to the CounterCraft management console and create a new campaign. Although it seems like our campaign has many elements, we really only need one or two deception servers: the one that contains the portal for downloading the software and, if we have created a dedicated server for it, the one that contains the shared folder. Depending on the campaign, the shared folder may be on a production server; in that case no monitoring agent is installed and only access to that folder is monitored.

The next step is to deploy our breadcrumbs. First, we are going to beaconize the documents that we will place in the shared folder (for confidentiality reasons we cannot explain here how the beacons are created).

Our shared folder has the following structure:

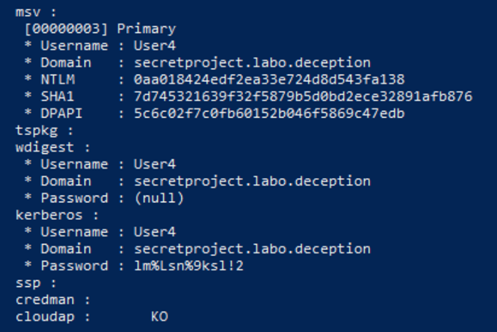

Next, we are going to inject the breadcrumbs into user computers that we think are compromised or that we consider likely to be compromised. To do this, we inject the credentials of User4 on computer COMP2 and of User5 on COMP3 into LSASS.

In the following image we can see the scenario:

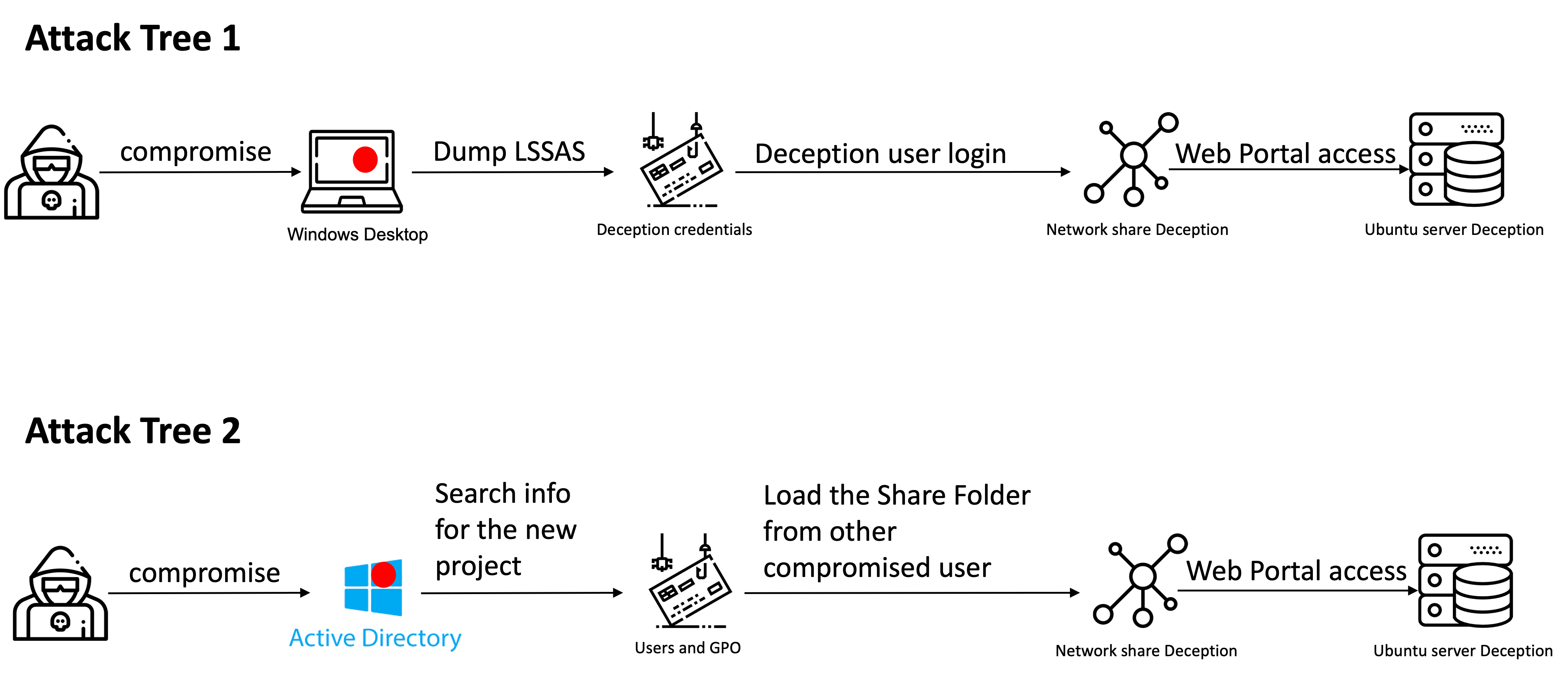

And in the following, the attack trees that an adversary could follow:

Finally, we are going to configure the detection rules:

Campaign monitoring - adversary activity

We have it all set up! Now we just wait for our adversaries to find our deception environment, but just to prove a point, we are going to simulate the adversary’s activity.

Following Attack Tree 1, we start from the hypothesis that an adversary has compromised User1’s computer, COMP2, through spear-phishing and has obtained local administrator privileges. Among other malicious activities, the attacker dumps LSASS and finds User4’s credentials in the “secretproject.labo.deception” domain.

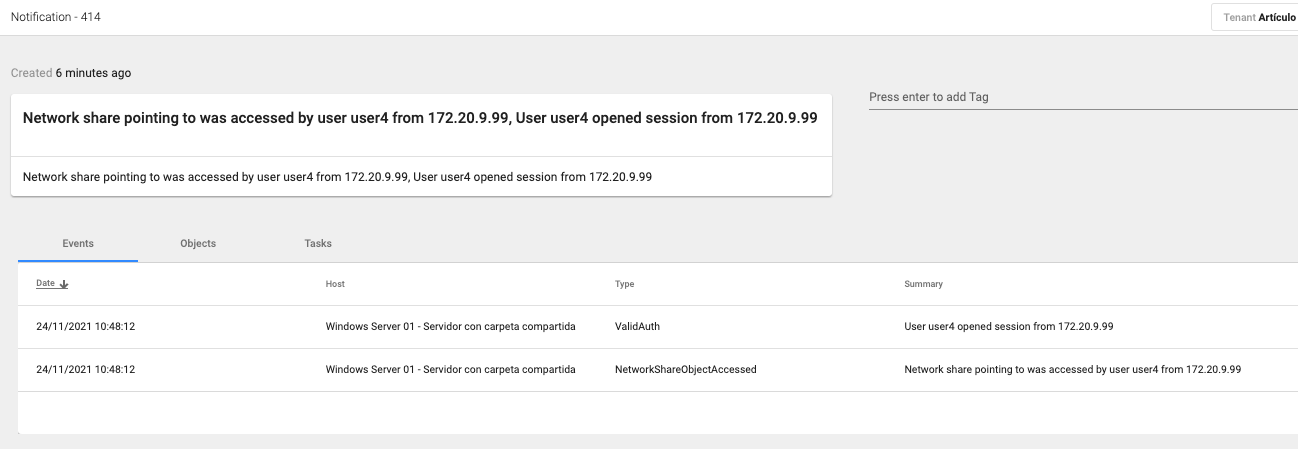

The attacker then uses these credentials to log on to the same COMP2 computer, or even tries another one. Logging in with this username loads the shared folder. The adversary finds it and accesses it.

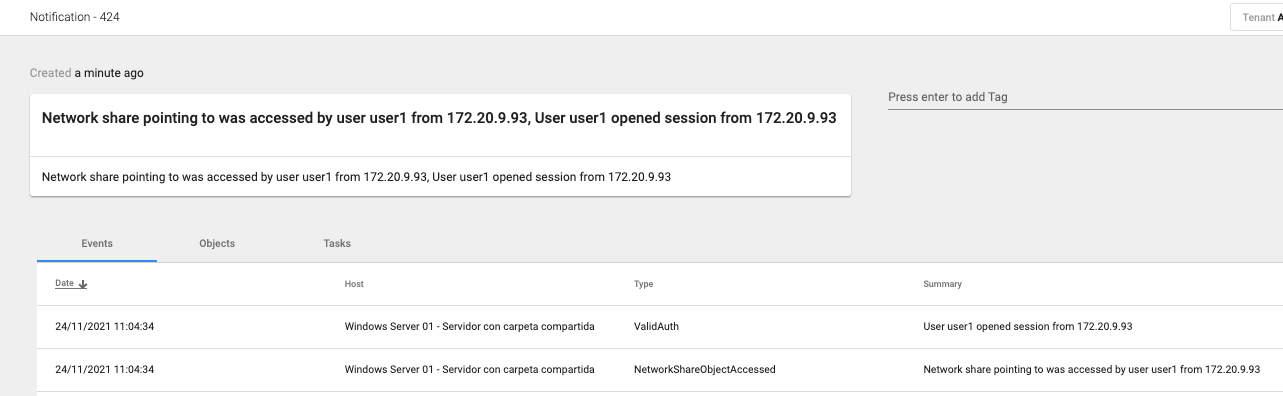

At this point, we receive a notification that the shared folder has been accessed by User4, along with the IP address it was accessed from. This tells us that User1 and the COMP2 computer have been compromised.

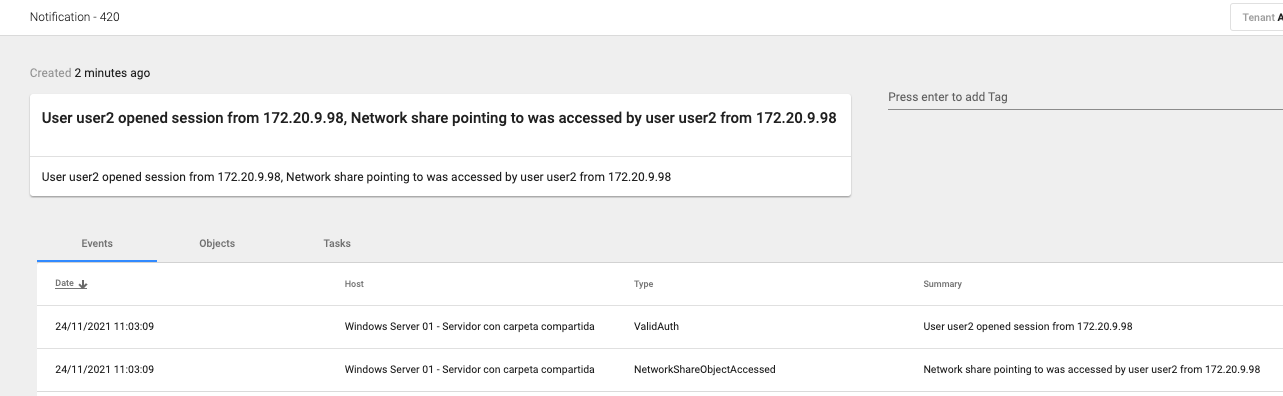

Following Attack Tree 2, we start from the hypothesis that an adversary is carrying out reconnaissance activities and finds information about the new project: domain, OU, users, computers and the GPO that loads the shared folder.

Using a previously compromised user (different from User4 and User5) she accesses the shared folder.

At this point, we get a notification that the shared folder has been accessed by User2, along with the IP address it was accessed from. This tells us that User2 is compromised.

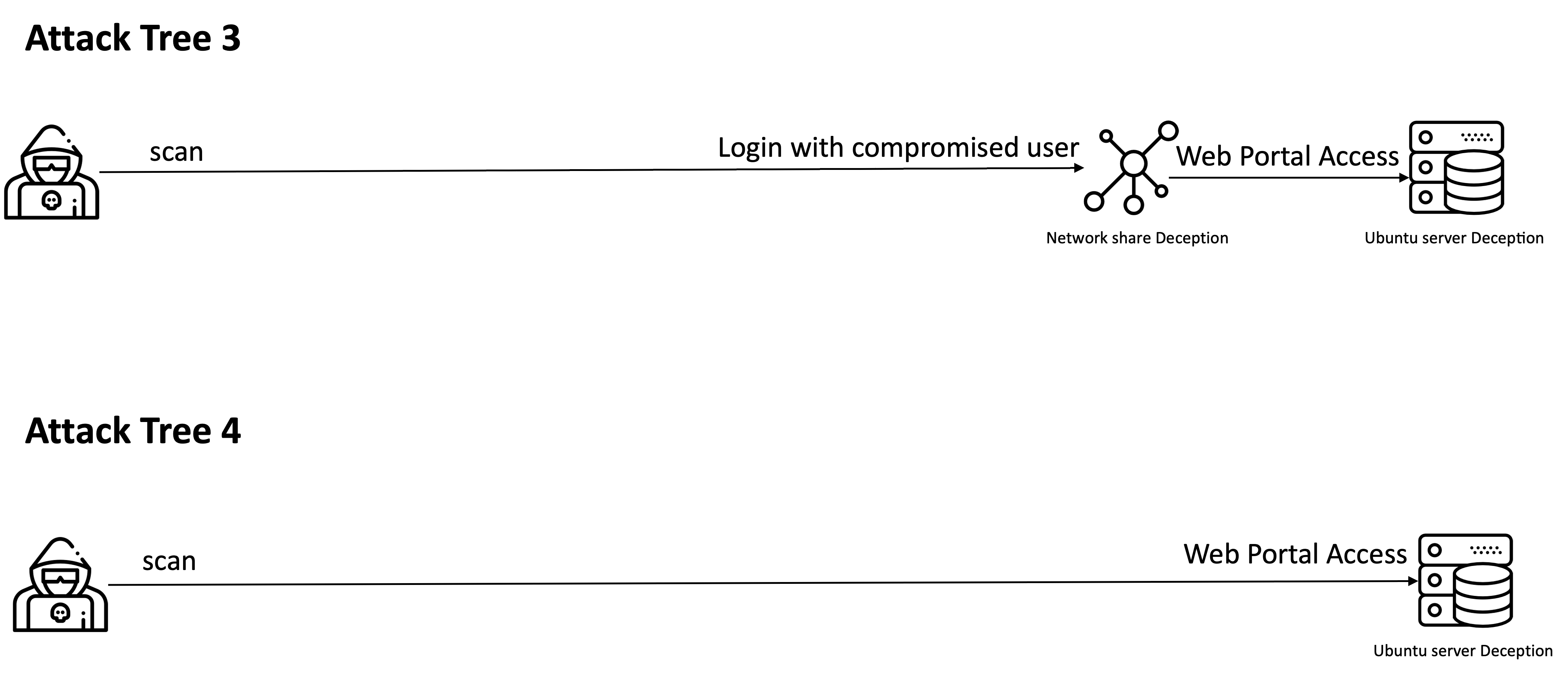

Following Attack Tree 3, an adversary may be scanning the network where our shared folder is located and accessing it with a compromised user.

At this point, we receive a notification that the shared folder has been accessed by User3, along with the IP address it was accessed from. This tells us that User3 is compromised.
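The reasoning behind these three notifications can be sketched in a few lines of Python. This is only an illustration of the logic, not how the CounterCraft platform implements its rules: the `ShareAccess` and `Finding` types and the `triage` function are hypothetical names, and the IP addresses in the usage example are made up. The breadcrumb map comes from the scenario above (User4 planted on COMP2, User5 on COMP3).

```python
from dataclasses import dataclass
from typing import Optional

# Where each decoy credential was planted in our campaign.
BREADCRUMB_HOSTS = {"User4": "COMP2", "User5": "COMP3"}


@dataclass(frozen=True)
class ShareAccess:
    """One access to the decoy shared folder (hypothetical event shape)."""
    user: str
    source_ip: str


@dataclass(frozen=True)
class Finding:
    user: str
    source_ip: str
    compromised_host: Optional[str]     # set when a decoy credential was used
    compromised_account: Optional[str]  # set when a production account was used
    reason: str


def triage(event: ShareAccess) -> Finding:
    """Nobody has a legitimate reason to open the decoy share, so every
    access is a finding; the user name tells us what was compromised."""
    host = BREADCRUMB_HOSTS.get(event.user)
    if host is not None:
        # Attack Tree 1: the credential only exists on one machine,
        # so its use means that machine's credentials were dumped.
        return Finding(event.user, event.source_ip, host, None,
                       f"decoy credential planted on {host} was used")
    # Attack Trees 2 and 3: a production account reached the decoy share.
    return Finding(event.user, event.source_ip, None, event.user,
                   "production account accessed the decoy share")
```

For example, `triage(ShareAccess("User4", "10.0.0.5"))` points at COMP2, while `triage(ShareAccess("User2", "10.0.0.9"))` flags the User2 account itself.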

Here our first three Attack Trees converge. Once in the shared folder, the adversary will navigate through the documents until she finds the Excel document with information about the portal for downloading the software under test.

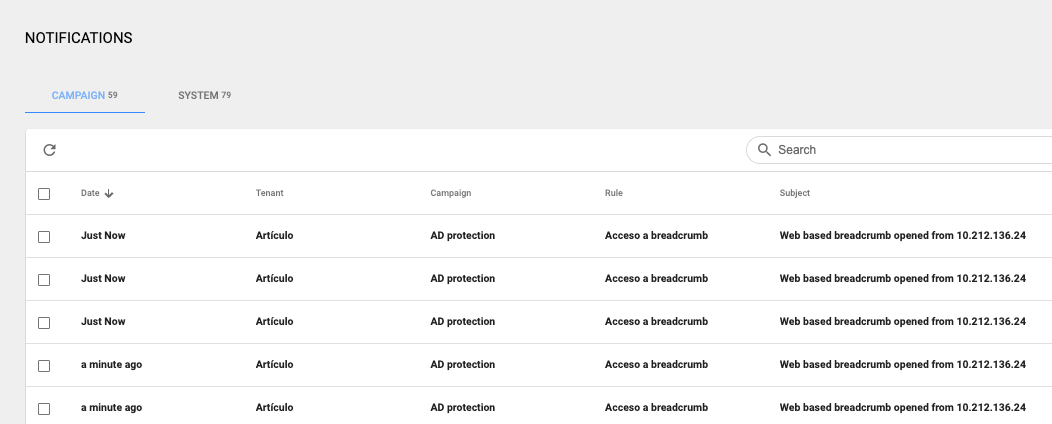

Meanwhile, we will receive notifications telling us that these documents have been opened:

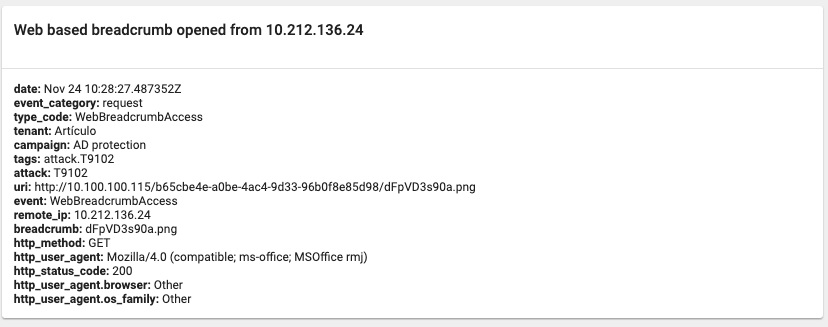

If we open the notifications, we can see more information about the event, such as the user-agent and the breadcrumb accessed:

The next step that the adversary takes is to access the web portal:

She then enters the credentials found in the Excel document. Once logged in, she will see the WIP - Work in Progress message.

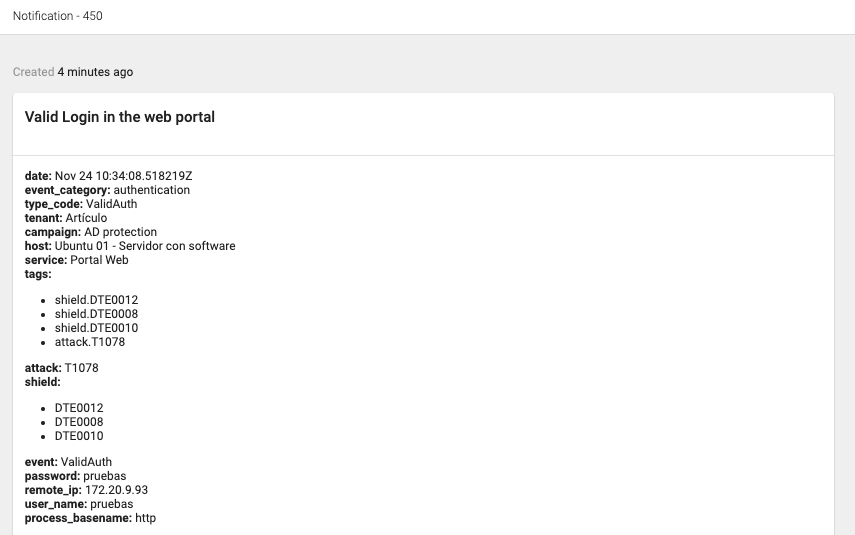

Meanwhile, we get a notification of the valid login:

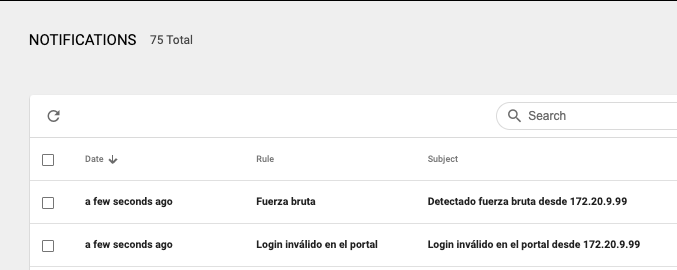

Now let’s take a look at the last Attack Tree. An adversary is scanning the network where our deception web portal is located and trying to gain access to it by brute force.

We receive notifications of all the activity and information about the users and passwords that are being used, the user-agent, etc.
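On a decoy portal every login attempt is already suspicious, but it is still useful to tell a brute-force run apart from a single login with the planted credentials. A common way to do that is a sliding-window counter of failed logins per source IP. The sketch below is our own illustration, not a CounterCraft rule; the class name, the threshold of 5 failures and the 60-second window are assumptions.

```python
from collections import defaultdict, deque


class BruteForceDetector:
    """Flag a source IP once it accumulates too many failed logins
    within a sliding time window."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 60.0):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # source IP -> failure timestamps

    def record_failure(self, source_ip: str, timestamp: float) -> bool:
        """Register a failed login; return True if the IP is now over the limit."""
        window = self._failures[source_ip]
        window.append(timestamp)
        # Drop failures that have fallen out of the time window.
        while window and timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) >= self.max_failures
```

Feeding it the failed attempts recorded by the portal (timestamps in seconds) is enough to raise a separate "brute force" notification for the offending IP.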

To sum up…

Let’s highlight three main things we have achieved just by creating a few deception assets:

But this is just a small example of what we can do with deception platforms.

We can inject deception credentials in bulk into user computers and monitor their use from other security tools. Any use of those credentials is illegitimate and is therefore an indicator that the computer where that breadcrumb is located has been compromised.
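The idea works best when every computer gets its own unique decoy credential, so that a single use pinpoints the machine. As a rough sketch of the bookkeeping (the injection itself is out of scope here), the following Python generates one random decoy account per host and lets us look up the host later. The function names, the `svc` prefix and the inventory layout are hypothetical; the domain is the one from our lab.

```python
import secrets
import string


def make_decoy_credentials(hosts, domain="secretproject.labo.deception",
                           prefix="svc"):
    """Return {username: {"host", "domain", "password"}} with one
    unique decoy account per host."""
    alphabet = string.ascii_letters + string.digits
    inventory = {}
    for host in hosts:
        username = f"{prefix}_{secrets.token_hex(3)}"
        while username in inventory:  # extremely unlikely, but keep names unique
            username = f"{prefix}_{secrets.token_hex(3)}"
        password = "".join(secrets.choice(alphabet) for _ in range(20))
        inventory[username] = {"host": host, "domain": domain,
                               "password": password}
    return inventory


def locate_breadcrumb(inventory, username):
    """Given a username seen in an alert, return the host where the
    decoy was planted, or None if it is not one of ours."""
    entry = inventory.get(username)
    return entry["host"] if entry else None
```

When another security tool reports a logon with one of these usernames, `locate_breadcrumb` tells us which computer to investigate.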

We can create campaigns to protect our DMZ, to protect ourselves from attacks in the reconnaissance phase, campaigns focused on the Dark Web, campaigns for OT, IoT… In conclusion, the only limit is our imagination… and, of course, our resources.

Deception Specialist & Threat Hunter

Entelgy Innotec Security

[1] https://arxiv.org/pdf/2104.03594.pdf

[2] https://military.wikia.org/wiki/Military_deception#cite_note-Handel215-10

[3] https://books.google.es/books/about/The_Cuckoo_s_Egg.html?id=9B1RfCAar2cC&printsec=frontcover&source=kp_read_button&hl=en&redir_esc=y#v=onepage&q&f=false

[4] https://www.countercraftsec.com/

[5] https://attack.mitre.org/

[6] https://engage.mitre.org/